Unlocking the Spoken Word: A Deep Dive into Automatic Speech Recognition (ASR) in the Fearless Steps Project

In our increasingly connected world, human-machine interactions through speech have become a cornerstone of modern technology. To elevate these interactions, extracting meaningful information from audio signals is crucial, and that’s where speech processing steps in. The “From SAD to ASR on the Fearless Steps Data” project, undertaken by researchers at Paderborn University, uses the Fearless Steps – 02 (FS-02) Corpus, derived from NASA’s Apollo space mission, to build an autonomous speech processing system. The system is broken down into three sub-tasks: Speech Activity Detection (SAD), Speaker Identification (SID), and Automatic Speech Recognition (ASR).

What is ASR and Why Does it Matter?

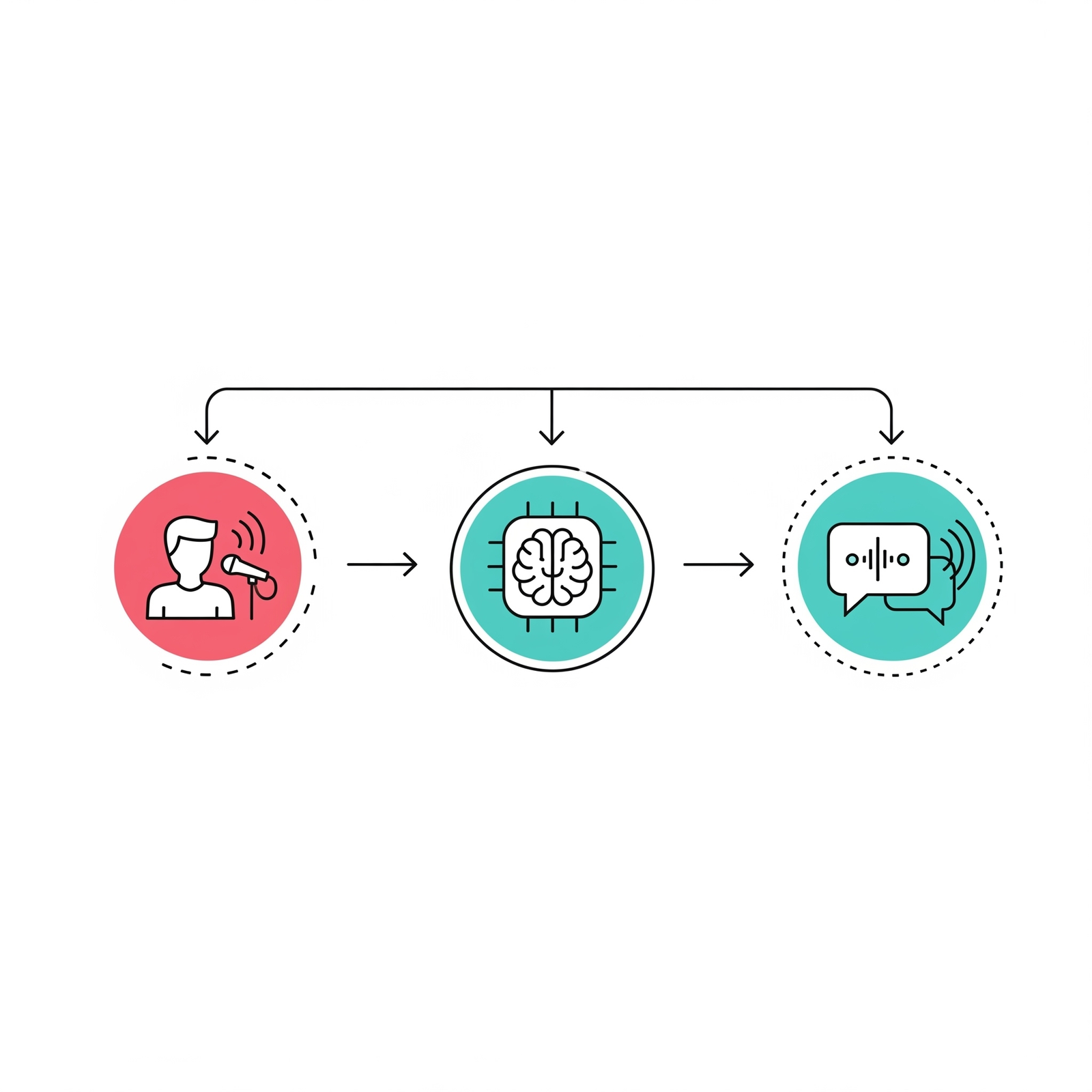

At its heart, Automatic Speech Recognition (ASR) is the technology that lets people speak to a computer interface, which then interprets and transcribes what was said. The primary objective of this project’s ASR component is to accurately transcribe the detected speech content. This is a sequence-to-sequence problem: the system maps an input sequence of acoustic features to an output sequence of transcription tokens.

From Traditional to End-to-End ASR

Traditionally, speech recognition systems were complex, relying on multiple components such as Hidden Markov Models, Gaussian Mixture Models, Deep Neural Networks, and n-gram language models. This project instead uses an end-to-end speech recognition system, which replaces these distinct components with a single deep neural network trained end to end. The model predicts the transcription one character at a time, and the characters are combined into words and then sentences, with attention mechanisms deciding which parts of the audio matter for each prediction.

The ASR Workflow: Decoding the Voice

The ASR process within the project involves several main stages:

- Feature Extraction: The initial step converts the input speech signal into acoustic features, primarily Mel-Frequency Cepstral Coefficients (MFCCs). Each frame of the speech signal becomes an 80-dimensional feature vector.

- Acoustic Model (Encoding): This part is responsible for generating a mathematical representation of the acoustic features, capturing the relationship between an audio signal and linguistic units (like phonemes). The encoder transforms the speech feature sequence into a hidden representation.

- Decoding: In this final stage, the model transcribes the audio clips into words. It relies heavily on both the acoustic model and a Language Model (LM), which assigns probabilities to sequences of words, helping to distinguish similar-sounding words and phrases.
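To illustrate how a language model can help separate similar-sounding hypotheses during decoding, here is a minimal sketch. It assumes the scores are combined as a weighted sum of log-probabilities (often called shallow fusion); the article does not state which combination rule the project used, and the candidate texts, probabilities, and `lm_weight` value below are invented for illustration.

```python
import math


def fuse_scores(acoustic_logprob, lm_logprob, lm_weight=0.5):
    """Weighted sum of acoustic and language-model log-probabilities.

    This combination rule is an assumption for illustration, not
    necessarily the one used in the project.
    """
    return acoustic_logprob + lm_weight * lm_logprob


# Hypothetical scores for two similar-sounding candidate transcripts.
candidates = {
    "go for landing": {"acoustic": math.log(0.40), "lm": math.log(0.30)},
    "go four landing": {"acoustic": math.log(0.45), "lm": math.log(0.01)},
}


def best_hypothesis(candidates, lm_weight=0.5):
    """Return the candidate text with the highest fused score."""
    return max(
        candidates,
        key=lambda text: fuse_scores(
            candidates[text]["acoustic"], candidates[text]["lm"], lm_weight
        ),
    )


print(best_hypothesis(candidates, lm_weight=0.0))  # acoustic score only
print(best_hypothesis(candidates, lm_weight=0.5))  # acoustic + LM
```

With the language model switched off, the acoustically stronger but implausible candidate wins; with it switched on, the more likely word sequence is preferred.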

The Power of the Transformer Architecture

The project’s ASR task primarily employs the Transformer architecture. A Transformer relies entirely on attention, rather than recurrence, to convert input sequences to output sequences. Because it does not have to process a sequence one step at a time as an LSTM does, training can be parallelized across positions, which speeds it up.

Key components of the Transformer model include:

- Input Embedding and Positional Encoding: Before being fed into the encoder, input audio signals (tokens) are mapped onto vectors (embeddings), and positional encoding injects information about the relative or absolute position of tokens.

- Encoder: Consisting of multiple identical units, the encoder transforms the acoustic features into a hidden representation. It includes multi-head self-attention and position-wise fully connected feed-forward layers.

- Decoder: Also comprising multiple identical units, the decoder generates the output sequence (characters) one at a time, using previously emitted characters as additional inputs. It has an additional multi-head attention subunit over the encoder’s output.

- Attention: This mechanism allows the model to focus on relevant parts of the speech. Self-attention relates different positions of input sequences to compute representations, while cross-attention uses information from both the encoder and decoder to calculate attention scores.
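The positional encoding and attention components above can be sketched in a few lines of NumPy. This is a simplified, single-head illustration and not the project's implementation: it omits the learned query, key, and value projections, multiple heads, masking, and the feed-forward layers. The sequence lengths and the model dimension of 8 are arbitrary choices for the example, and the random arrays merely stand in for real encoder and decoder states.

```python
import numpy as np


def positional_encoding(length, d_model):
    """Sinusoidal positional encoding, shape (length, d_model); d_model must be even."""
    positions = np.arange(length)[:, None]
    dims = np.arange(0, d_model, 2)[None, :]
    angles = positions / np.power(10000.0, dims / d_model)
    encoding = np.zeros((length, d_model))
    encoding[:, 0::2] = np.sin(angles)  # even dimensions
    encoding[:, 1::2] = np.cos(angles)  # odd dimensions
    return encoding


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def attention(queries, keys, values):
    """Scaled dot-product attention; returns (output, attention weights)."""
    d_k = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)
    return weights @ values, weights


rng = np.random.default_rng(0)
d_model = 8
# Stand-ins for 6 encoder positions (audio) and 3 decoder positions (characters).
encoder_states = rng.normal(size=(6, d_model)) + positional_encoding(6, d_model)
decoder_states = rng.normal(size=(3, d_model)) + positional_encoding(3, d_model)

# Self-attention: queries, keys, and values all come from the same sequence.
self_out, self_w = attention(encoder_states, encoder_states, encoder_states)

# Cross-attention: queries from the decoder, keys and values from the encoder.
cross_out, cross_w = attention(decoder_states, encoder_states, encoder_states)

print(self_w.shape, cross_w.shape)
```

Each row of the weight matrices sums to one, so every output position is a weighted average of the value vectors it attends to.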

Incorporating Speaker Identity: Speaker Adaptation

A significant aspect of this project is the effort to incorporate speaker information into the ASR model to improve performance. This concept, known as speaker adaptation, aims to minimize the mismatch between evaluation data and trained models, potentially reducing the Word Error Rate (WER). The project explored three main implementations using one-hot speaker vector encoding:

- Concat Model: In this approach, the one-hot speaker vectors are concatenated directly with the acoustic features before being sent to the acoustic model for training. This increases the feature dimension, for example, from 80 to 243 dimensions (80 acoustic + 163 speaker dimensions).

- Embed Model: Here, the one-hot vectors are linearly projected to match the encoder’s dimension (256) and then added after the convolution layer.

- Encode Model: This implementation involves adding the linearly projected speaker vectors to the final output of the encoder.
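The three variants differ only in where the speaker vector enters the model, which a shape-level NumPy sketch can make concrete. The dimensions 80, 163, and 256 come from the description above; everything else is an assumption for illustration: the random arrays stand in for real features and layer outputs, the projection matrix would be learned in practice, and the subsampling factor of 4 for the convolution layer is a guess.

```python
import numpy as np

NUM_SPEAKERS = 163   # size of the one-hot speaker vector
FEATURE_DIM = 80     # acoustic feature dimension
ENCODER_DIM = 256    # encoder dimension
SUBSAMPLING = 4      # assumed time reduction of the convolution layer

rng = np.random.default_rng(0)


def one_hot(speaker_index, num_speakers=NUM_SPEAKERS):
    """One-hot vector identifying a speaker."""
    vector = np.zeros(num_speakers)
    vector[speaker_index] = 1.0
    return vector


frames = 50
features = rng.normal(size=(frames, FEATURE_DIM))  # stand-in acoustic features
speaker = one_hot(7)                               # hypothetical speaker index

# Concat: append the one-hot vector to every frame (80 + 163 = 243).
concat_input = np.concatenate(
    [features, np.tile(speaker, (frames, 1))], axis=1
)

# Embed and Encode both use a linear projection to the encoder dimension.
projection = rng.normal(scale=0.01, size=(NUM_SPEAKERS, ENCODER_DIM))
speaker_embedding = speaker @ projection           # shape (256,)

# Embed: add the projected vector to the output of the convolution layer.
conv_output = rng.normal(size=(frames // SUBSAMPLING, ENCODER_DIM))
embed_input = conv_output + speaker_embedding      # broadcast over time

# Encode: add the projected vector to the final encoder output.
encoder_output = rng.normal(size=(frames // SUBSAMPLING, ENCODER_DIM))
encode_output = encoder_output + speaker_embedding

print(concat_input.shape, embed_input.shape, encode_output.shape)
```

Because the speaker vector is one-hot, the linear projection simply selects one row of the projection matrix, so each speaker effectively gets its own 256-dimensional offset.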

The Fearless Steps – 02 Dataset: Voices from Apollo 11

The project relies on the Fearless Steps – 02 (FS-02) Corpus, derived from NASA’s Apollo 11 mission communications. This dataset provides long recordings with multiple speakers and varying silences, proving ideal for designing robust speech recognition systems. For the ASR task, audio segments are used, which are short-duration speech sections from the audio streams. A challenge with this data is the presence of single-word utterances shorter than 0.2 seconds. To avoid issues like speaker mismatch and short utterances impacting training, the researchers ensured the same set of speakers in both training and development datasets and removed utterances with fewer than 3 words from the training data. The project also used language models trained on FS-02 and Wall Street Journal (WSJ) data.
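The two data-cleaning rules described above could be expressed roughly as follows. This is a sketch under assumptions: the article does not say how the speaker sets were aligned, so the code simply keeps speakers that appear in both sets, and the record layout, speaker labels, and texts are invented toy data.

```python
def filter_utterances(train, dev, min_words=3):
    """Drop short training utterances, then keep only speakers present in both sets."""
    # Rule 1: remove training utterances with fewer than `min_words` words.
    train_long = [u for u in train if len(u["text"].split()) >= min_words]

    # Rule 2: keep the same set of speakers in training and development data.
    shared = {u["speaker"] for u in train_long} & {u["speaker"] for u in dev}
    train_kept = [u for u in train_long if u["speaker"] in shared]
    dev_kept = [u for u in dev if u["speaker"] in shared]
    return train_kept, dev_kept


# Hypothetical records; speaker labels and texts are made up.
train = [
    {"speaker": "SPK_A", "text": "go for landing"},
    {"speaker": "SPK_A", "text": "roger"},
    {"speaker": "SPK_B", "text": "we copy you down"},
    {"speaker": "SPK_C", "text": "looks good here flight"},
]
dev = [
    {"speaker": "SPK_A", "text": "stand by"},
    {"speaker": "SPK_B", "text": "roger"},
    {"speaker": "SPK_D", "text": "we are go"},
]

train_kept, dev_kept = filter_utterances(train, dev)
print([u["text"] for u in train_kept])
print([u["speaker"] for u in dev_kept])
```

The word-count filter runs first so that a speaker whose training utterances are all short is also dropped from the development set, keeping the two speaker sets identical.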

Measuring Success: Performance and Challenges

The performance of the ASR model is primarily evaluated using Word Error Rate (WER) and Character Error Rate (CER). WER counts the insertions (added words), substitutions (incorrect words), and deletions (omitted words) needed to transform the model’s output into the reference text, and divides that count by the number of words in the reference. CER applies the same calculation at the character level.
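As a worked illustration of these metrics, the sketch below computes WER and CER with a standard edit-distance (Levenshtein) calculation. It is a minimal version for clarity, not the scoring tool used in the project, and it applies no text normalization; the example sentences are invented.

```python
def edit_distance(reference, hypothesis):
    """Minimum number of substitutions, deletions, and insertions between two token lists."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_token in enumerate(reference, start=1):
        current = [i]
        for j, hyp_token in enumerate(hypothesis, start=1):
            substitution = previous[j - 1] + (ref_token != hyp_token)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]


def wer(reference, hypothesis):
    """Word Error Rate: word-level edit distance divided by reference length."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character Error Rate: character-level edit distance divided by reference length."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(list(reference), list(hypothesis)) / len(reference)


print(wer("the eagle has landed", "the eagle landed"))  # one deletion out of four words
print(wer("go for landing", "go four landing now"))     # one substitution, one insertion
```

Because insertions are counted against the reference length, WER can exceed 100% when the hypothesis contains many extra words.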

Initial evaluations of the Baseline Transformer model showed WERs of 41.3% without a language model, improving to 39.9% with the Fearless language model and 36.8% with data modification.

For the modified architectures incorporating speaker information, the results were varied:

- Concat Model: WER of 92%.

- Embed Model: WER of 89%.

- Encode Model: WER of 33%.

The Concat and Embed models underperformed, overfitting to the development dataset. This was primarily attributed to severe class imbalance and utterance-speaker disproportion within the dataset: some speakers had a very high number of utterances while more than half had fewer than 25, so the model learned rapidly for the dominant speakers.

However, with the learning rate reduced by half, the Encode model achieved the best result, a WER of 33%, compared with 36.8% for the best Baseline configuration. This suggests that incorporating speaker information can be effective when hyperparameters are appropriately tuned.

The Road Ahead for ASR

To further enhance the ASR system, the project report suggests crucial avenues for future work:

- Further optimizations in data manipulations and augmentations for the integrated sub-systems.

- Incorporating the speaker representations learned from the SID task into the ASR model, which is hypothesized to further improve results. This points to a potential synergy between the project’s distinct sub-tasks.

In conclusion, the ASR component of this project showcases the application of deep learning, particularly the Transformer architecture, to transcribing challenging audio data from the Apollo 11 mission. While challenges like data imbalance remain, the improvement obtained with speaker adaptation in the Encode model points the way toward more robust and accurate speech recognition systems.